This blog post explores how scene simulation with MuSES and CoTherm can generate synthetic electro-optical/infrared (EO/IR) images for applications such as the design and testing of artificial intelligence/machine learning algorithms. There are many motivations for automating image generation, including the need for large sets of training images and the difficulty of acquiring a suitable measured image library with the desired variation in target, scene composition, weather conditions, etc. Training data for machine learning algorithms must be representative of expected real-world data and large enough to support robust learning.

Deep learning systems are often limited by the availability of properly labeled training images, which leads to inadequate variation in target aspect angle, time of day, season, etc. This problem is especially significant outside the visible waveband. Obtaining ample measured remote sensing images can be expensive, requiring time-intensive field work and post-processing. Fortunately, synthetic imagery can be an appropriate and powerful alternative.

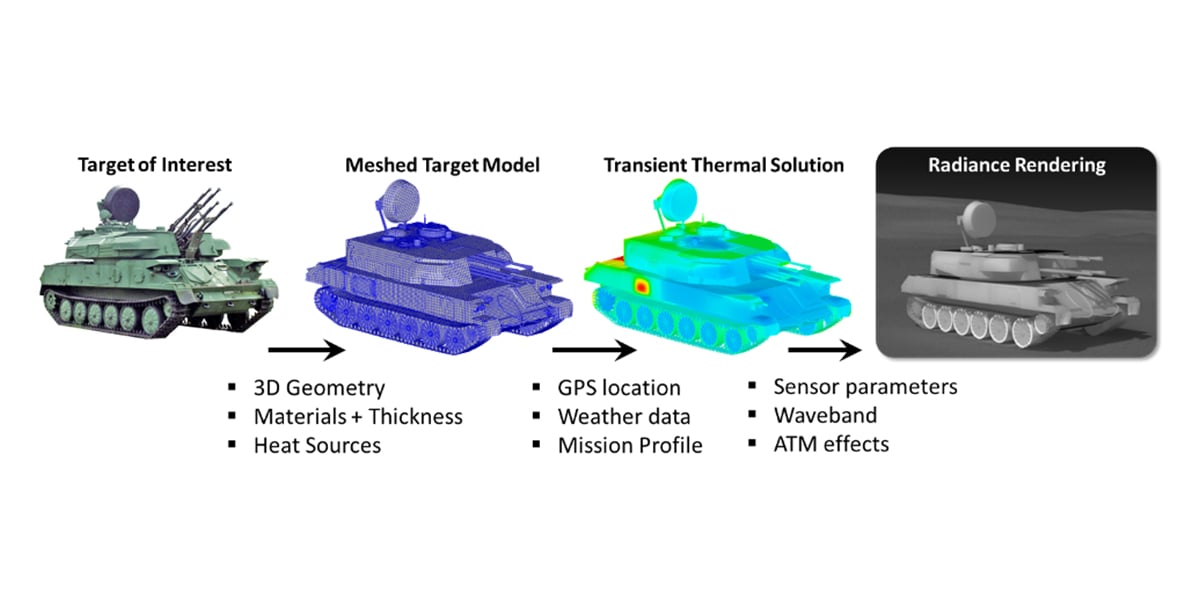

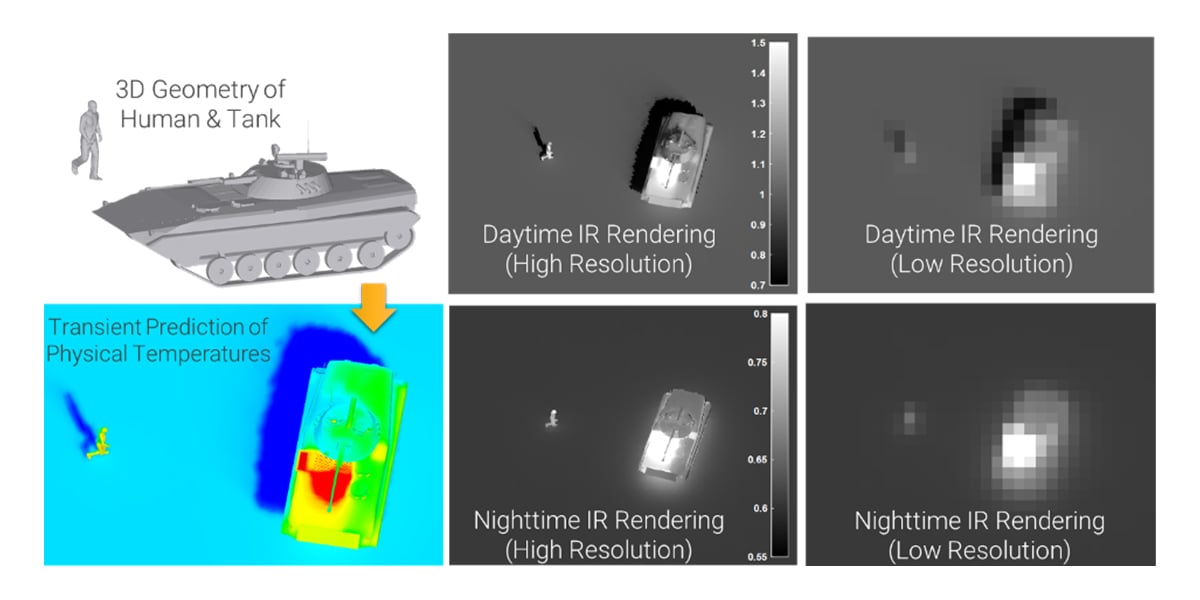

Realistic signature prediction of target/background scenes in thermal infrared wavebands depends primarily on the accurate prediction of physical temperatures. EO/IR simulation model development requires a 3D surface mesh, thermal material properties, wavelength-dependent optical surface properties, and active heat sources. Once an accurate thermal solution has been calculated using weather data and mission profiles, sensor-specific radiance images for an EO/IR spectral waveband (visible through LWIR) can be generated.
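To illustrate why physical temperature drives the thermal signature, the sketch below integrates Planck's law over a waveband to get the in-band radiance of an ideal blackbody. It is a textbook calculation, not a MuSES routine: the function names, the 8 to 12 µm LWIR band limits, and the unit-emissivity assumption are all illustrative choices.

```python
import math

H = 6.62607015e-34   # Planck constant, J*s
C = 2.99792458e8     # speed of light, m/s
KB = 1.380649e-23    # Boltzmann constant, J/K


def planck_spectral_radiance(wavelength_m: float, temp_k: float) -> float:
    """Blackbody spectral radiance in W / (m^2 * sr * m)."""
    x = H * C / (wavelength_m * KB * temp_k)
    return (2.0 * H * C**2) / (wavelength_m**5 * math.expm1(x))


def band_radiance(temp_k: float, lo_um: float = 8.0, hi_um: float = 12.0,
                  steps: int = 400) -> float:
    """In-band blackbody radiance in W / (m^2 * sr), via the trapezoidal rule.

    The default band limits (8 to 12 micrometers) are an assumed LWIR band.
    """
    lo, hi = lo_um * 1e-6, hi_um * 1e-6
    dw = (hi - lo) / steps
    total = 0.0
    for i in range(steps + 1):
        weight = 0.5 if i in (0, steps) else 1.0  # trapezoid end points
        total += weight * planck_spectral_radiance(lo + i * dw, temp_k)
    return total * dw


if __name__ == "__main__":
    # Compare a background at 300 K with a target surface 10 K warmer.
    background = band_radiance(300.0)
    target = band_radiance(310.0)
    print(f"background: {background:.2f} W/m^2/sr")
    print(f"target:     {target:.2f} W/m^2/sr")
    print(f"contrast:   {target - background:.2f} W/m^2/sr")
```

Even a modest temperature error shifts the in-band radiance noticeably, which is why the thermal solution has to be accurate before any image is rendered.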

MuSES provides realistic temperature and EO/IR sensor radiance predictions based on comprehensive heat transfer calculations using high-resolution 3D geometry, component heat sources, and environmental boundary conditions. The MuSES rendering process includes thermal emissions, diffuse and directional specular reflections from environmental and illumination sources, atmospheric effects, and reflections from clutter such as trees or bushes.
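As a simplified illustration of how emission and reflection combine, consider an opaque, diffuse, gray surface. This is a textbook idealization rather than the MuSES rendering model, which also handles directional specular reflections. With emissivity $\varepsilon$, surface temperature $T_s$, in-band blackbody radiance $L_{bb}$, and incident environmental radiance $L_{env}$ (all symbols introduced here for illustration), the radiance leaving the surface is

$$
L_{surface} = \varepsilon \, L_{bb}(T_s) + (1 - \varepsilon) \, L_{env}
$$

The factor $(1 - \varepsilon)$ is the reflectance of an opaque surface under Kirchhoff's law, so a low-emissivity surface mirrors its surroundings more than it reveals its own temperature.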

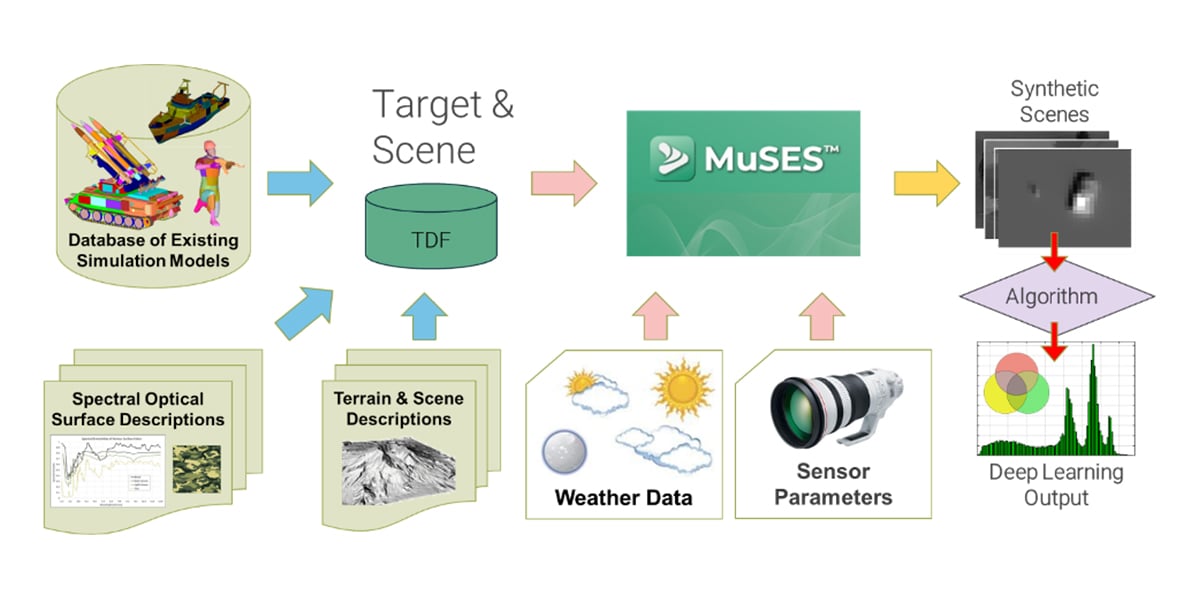

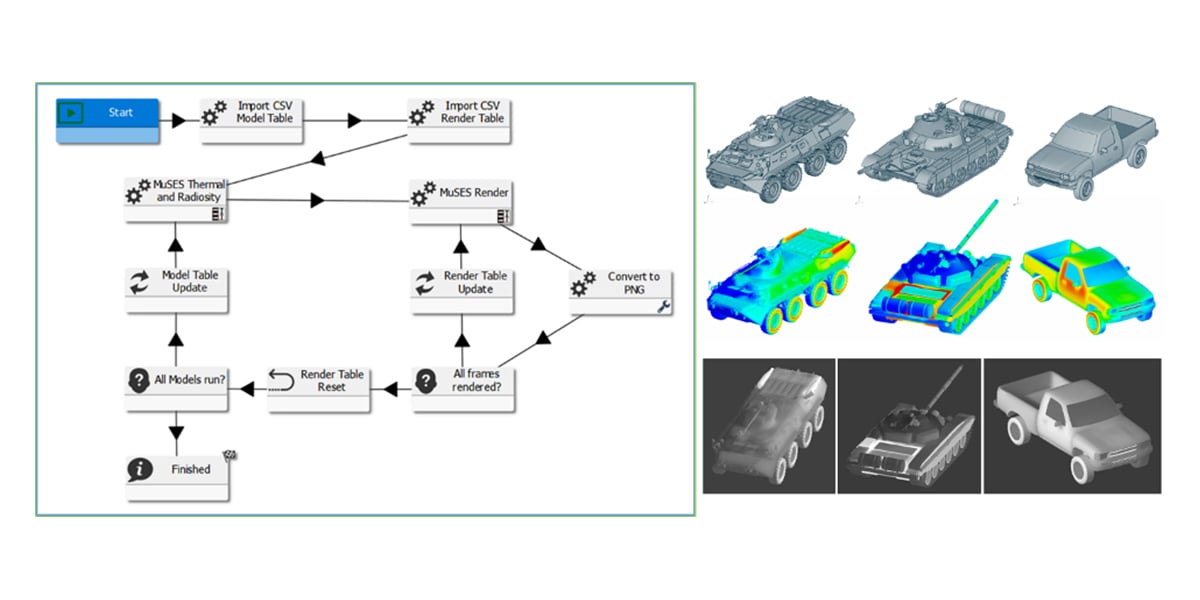

This EO/IR simulation procedure results in a sensor-specific radiance rendering of a target/background scene from a user-prescribed perspective. CoTherm provides automation capabilities to manage the scene simulation process, varying input parameters to generate the desired MuSES imagery. An efficient MuSES/CoTherm-based automated scene simulation procedure affords control over image content.

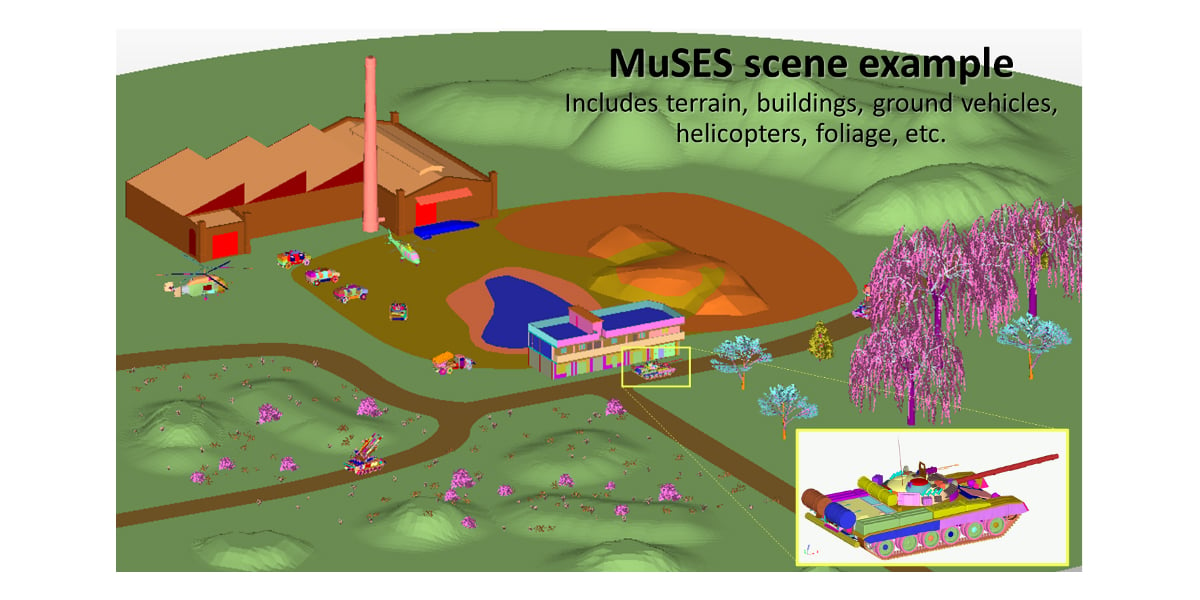

Our approach capitalizes on the scene simulation capabilities of MuSES to create a large set of training data synthetically with the desired level of detail/resolution and variation. An extensive database of 3D target models exists (including ground vehicles, maritime vessels, aircraft, human personnel, etc.) with accurate geometric features, optical surface properties, and internal heat sources (e.g., engine, exhaust, and electronics).

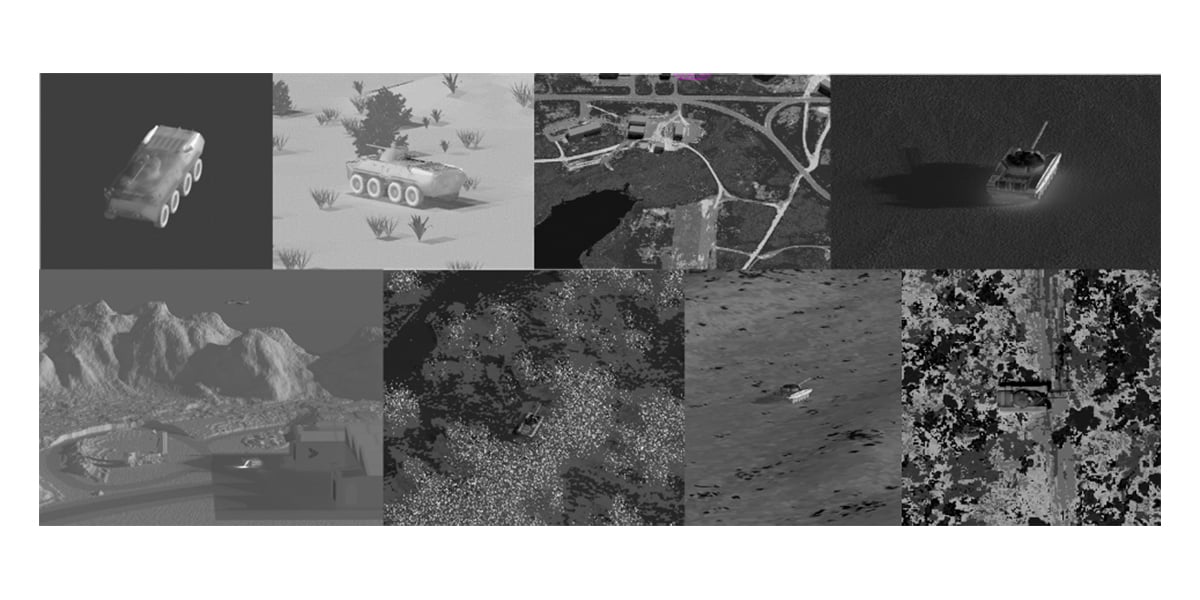

These target models can be combined with various types of terrains or other backgrounds, as well as clutter elements like trees and bushes, to create synthetic scenes which can be ray-traced to generate radiometric images from the perspective of a virtual sensor.

CoTherm is used to automate a process that combines various target models from the database with various environmental conditions and sensor parameters to generate a large set of synthetic scene images. Rendered target images can be created at any global position for the desired season, time of day, and sensor perspective (e.g., aspect angle). This library of rendered images is automatically classified to create labeled training data for deep learning algorithms meant to recognize and/or identify targets in scenes of interest.
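The idea of such a sweep can be sketched in plain Python. This is not the CoTherm API: `SceneCase`, `build_cases`, `label_manifest`, the parameter names, and the file-naming scheme are hypothetical and only show how a Cartesian product of scene variables yields both a run list and its labels.

```python
import itertools
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class SceneCase:
    """One hypothetical simulation case: a target plus scene variables."""
    target: str
    month: int
    hour: int
    azimuth_deg: int
    elevation_deg: int

    @property
    def image_name(self) -> str:
        # File name encodes every varied parameter, so each case is unique.
        return (f"{self.target}_m{self.month:02d}_h{self.hour:02d}"
                f"_az{self.azimuth_deg:03d}_el{self.elevation_deg:02d}.png")


def build_cases(targets, months, hours, azimuths, elevations):
    """Enumerate every combination of the supplied parameter values."""
    combos = itertools.product(targets, months, hours, azimuths, elevations)
    return [SceneCase(*combo) for combo in combos]


def label_manifest(cases):
    """Pair each planned image with its class label and scene metadata.

    The label is known before rendering because the scene definition
    already states which target model was placed in the scene.
    """
    return [{"image": c.image_name, "label": c.target, **asdict(c)}
            for c in cases]


if __name__ == "__main__":
    cases = build_cases(
        targets=["truck", "tank"],        # placeholder target names
        months=[1, 7],
        hours=[6, 12, 18],
        azimuths=range(0, 360, 90),
        elevations=[10, 30],
    )
    print(len(cases), "cases")
    print(json.dumps(label_manifest(cases)[0], indent=2))
```

Because every image is generated from a known scene definition, the labels come for free, and no manual annotation pass is needed.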

This simulation strategy incorporates the following important capabilities:

- Detailed 3D finite element geometry for target vehicles and a combination of faceted and non-faceted background scenes

- Application of thermal material properties, optical surface properties, internal heat sources, and transient environmental boundary conditions for accurate transient heat transfer calculation

- Ability to incorporate effects of camouflage patterns with spatially-varying optical surface properties

- Prediction of scene radiances that combine temperature-based emissions with directional reflections of environmental and illumination sources

- Inclusion of atmospheric impacts such as extinction (due to absorption and scattering) and path radiance

- Ability to specify detector resolution, sensor-target slant range, field of view, sensor perspective, object heading, etc., to increase the robustness of training procedures

- Inclusion of optical sensor system effects (blurring and noise) for more realistic training data

- Ability to automate these capabilities in an efficient process
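The atmospheric and sensor effects in the list above can be pictured with a toy post-processing chain. This is a minimal sketch under strong assumptions, not how MuSES implements them: a single band-averaged extinction coefficient, a user-supplied path radiance, a 3x3 box blur standing in for the optics, and additive Gaussian noise standing in for the detector. All function and parameter names are hypothetical.

```python
import math
import random


def apply_atmosphere(image, slant_range_km, extinction_per_km, path_radiance):
    """Attenuate at-target radiance (Beer-Lambert) and add path radiance."""
    tau = math.exp(-extinction_per_km * slant_range_km)  # transmittance
    return [[tau * value + path_radiance for value in row] for row in image]


def box_blur(image):
    """3x3 mean filter with edge clamping, a crude stand-in for optics blur."""
    rows, cols = len(image), len(image[0])
    blurred = []
    for r in range(rows):
        out_row = []
        for c in range(cols):
            acc = 0.0
            for dr in (-1, 0, 1):
                for dc in (-1, 0, 1):
                    rr = min(max(r + dr, 0), rows - 1)
                    cc = min(max(c + dc, 0), cols - 1)
                    acc += image[rr][cc]
            out_row.append(acc / 9.0)
        blurred.append(out_row)
    return blurred


def add_noise(image, sigma, seed=0):
    """Add zero-mean Gaussian noise; the seed keeps runs repeatable."""
    rng = random.Random(seed)
    return [[value + rng.gauss(0.0, sigma) for value in row] for row in image]


if __name__ == "__main__":
    # A 5x5 scene with a single warm pixel on a uniform background.
    scene = [[10.0] * 5 for _ in range(5)]
    scene[2][2] = 14.0
    at_sensor = apply_atmosphere(scene, slant_range_km=2.0,
                                 extinction_per_km=0.1, path_radiance=1.5)
    degraded = add_noise(box_blur(at_sensor), sigma=0.05, seed=42)
    for row in degraded:
        print(" ".join(f"{value:6.2f}" for value in row))
```

Longer slant ranges lower the transmittance and raise the relative share of path radiance, so target contrast falls with distance. Training on images that include this degradation exposes an algorithm to the conditions it will meet in measured data.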

In conclusion, acquiring sufficient measured training data for machine learning algorithms can be challenging, but synthetic imagery can be generated as an effective alternative. Scene variables such as target resolution and aspect angle, background details, sensor parameters and location, season, and time of day can be controlled. This allows image specifics to be varied as necessary to obtain proper training data for peak algorithm performance. Simulation methodologies are becoming more advanced, allowing us to get closer to the ideal designs we seek to provide. If you are interested in learning more about how the scene simulation capabilities of MuSES can help your team, please feel free to request a live demo of our software.

Visit our website at support.thermoanalytics.com for

- FAQs

- Webinars

- Tutorials
