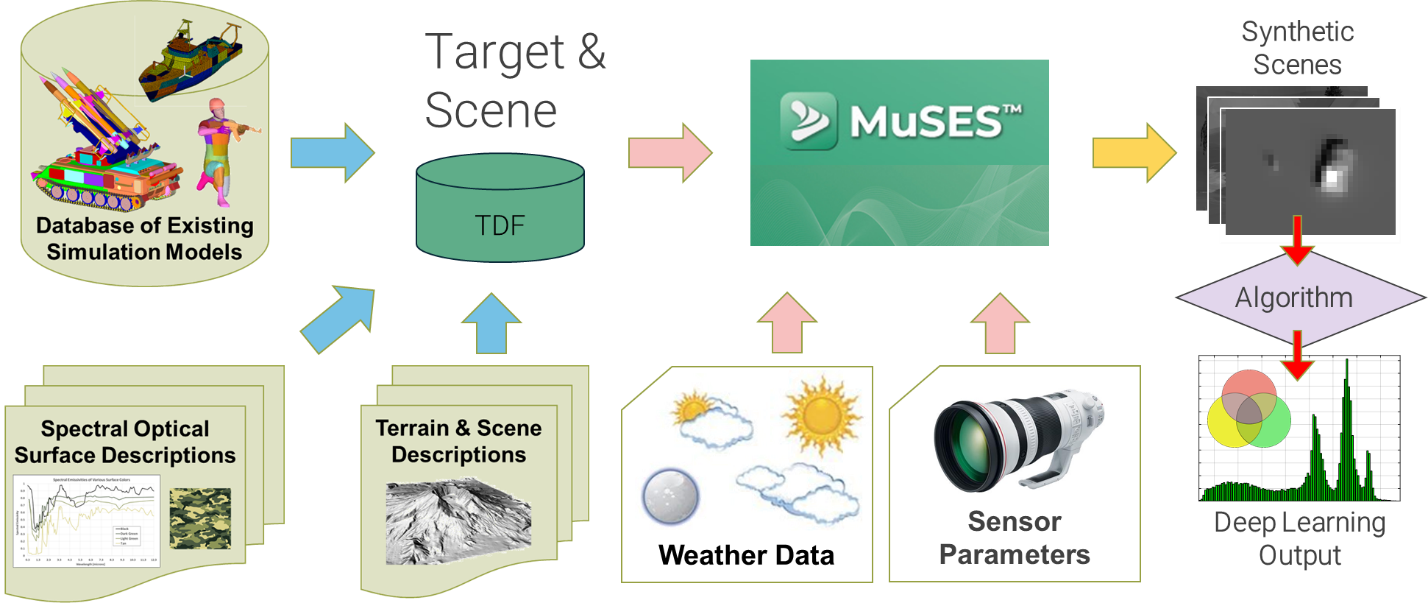

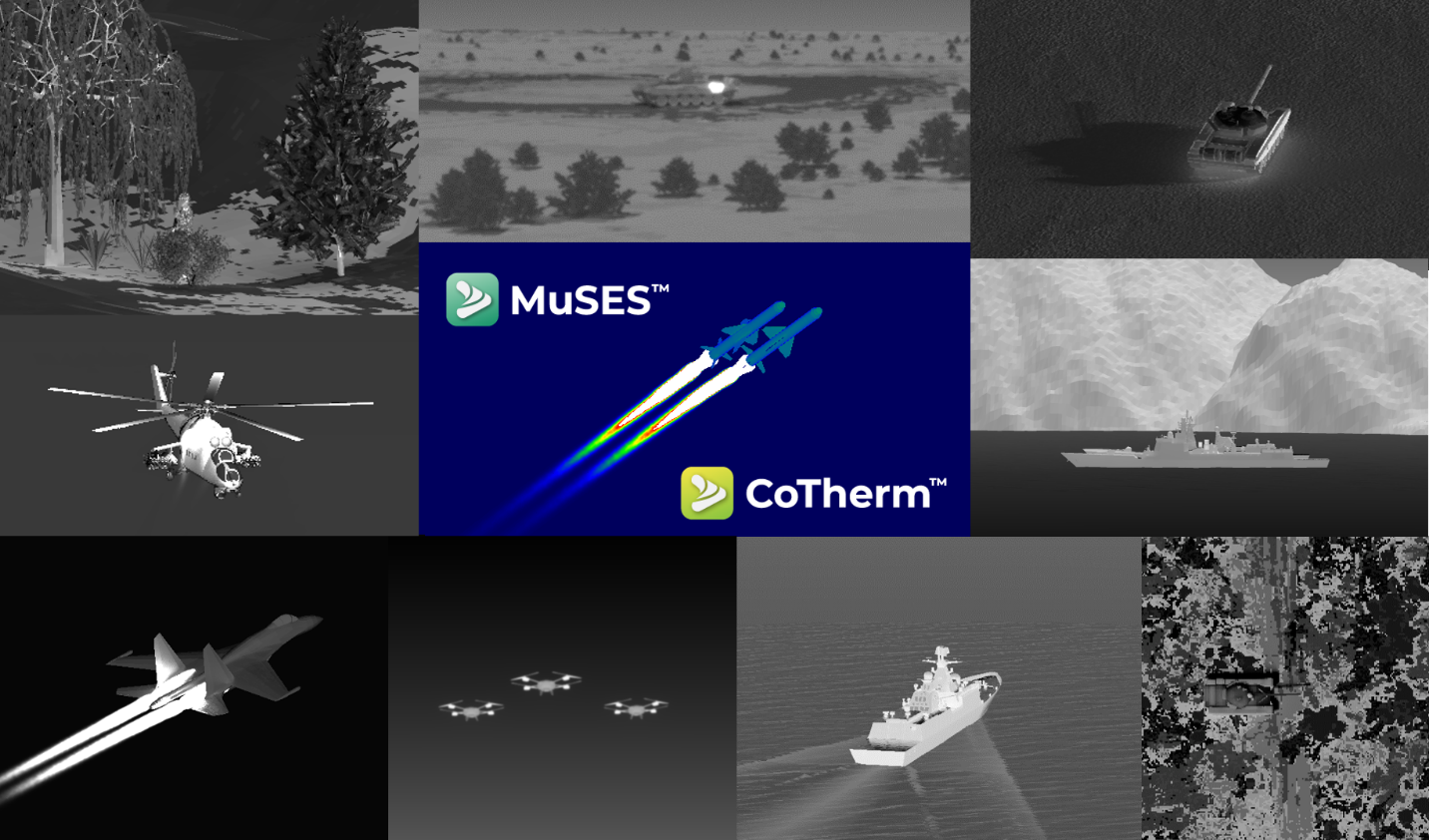

This blog post explores how scene simulation performed with MuSES™ and CoTherm™ can be used to generate synthetic EO/IR images for a variety of applications, including training datasets for artificial intelligence/machine learning algorithms. There are many motivations for such an automated image-generation process, including the need for large sets of training images and the difficulty of acquiring a suitable measured image library with the desired variation in target, scene composition, weather conditions, etc. Training data for machine learning algorithms must be representative of the detail and variation expected in real-world data and sufficient in quantity for robust learning. Deep learning systems are often limited by the availability of properly labeled training images and by inadequate variation in target aspect angle, time of day, season, etc. This problem is especially significant outside the visible spectrum, because thermal infrared images are typically far less widely available. Obtaining ample measured remote sensing images can be expensive, requiring time-intensive field tests and subsequent data post-processing. Fortunately, MuSES-based synthetic imagery can be an appropriate and powerful alternative.

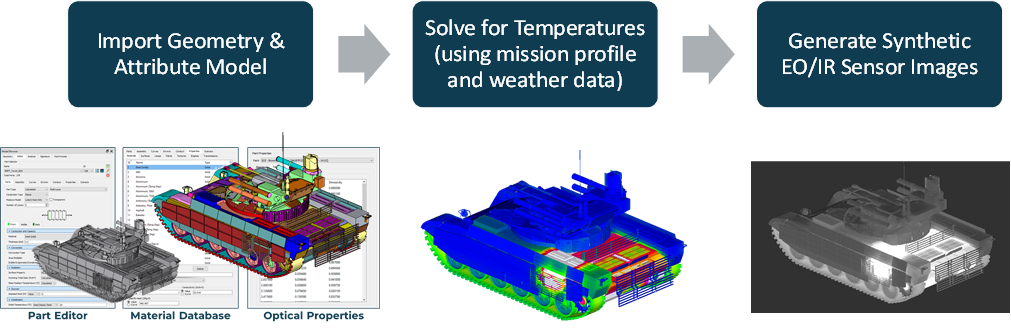

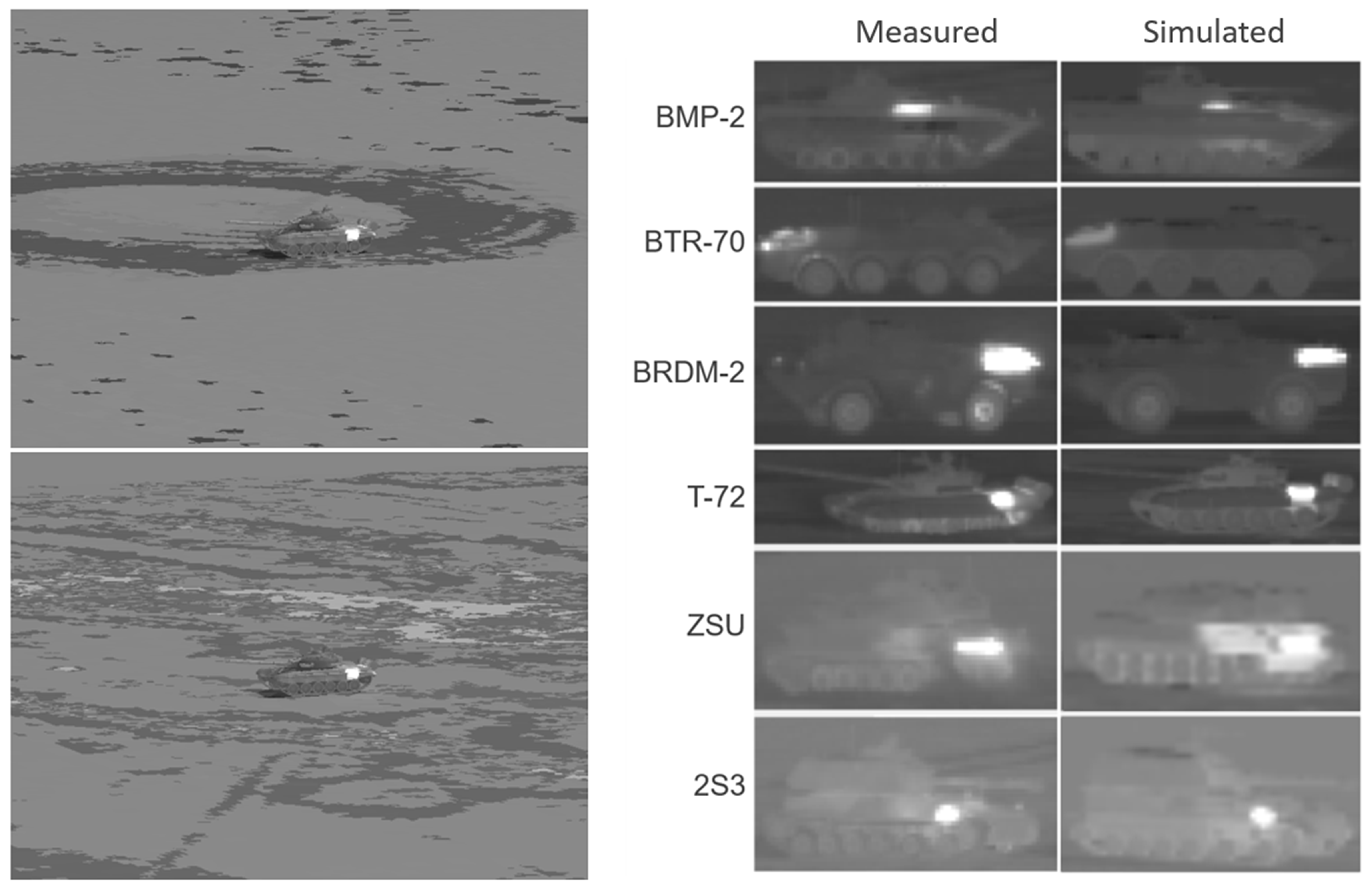

Realistic signature prediction of target/background scenes in thermal infrared wavebands (e.g., MWIR and LWIR) depends primarily on accurately predicting physical temperatures. EO/IR simulation model development requires a 3D surface mesh, thermal material properties, wavelength-dependent optical surface properties, and active heat sources. Once an accurate thermal solution has been calculated using weather data and mission profiles, sensor-specific radiance images can be generated for any EO/IR spectral waveband from the visible through LWIR (roughly 0.4-20 microns).
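
To illustrate why temperature accuracy matters so much in these wavebands, the sketch below integrates Planck's law over a waveband to estimate the in-band radiance of an idealized gray surface. It is a textbook calculation, not the MuSES radiance model; the function names, the band limits of 3-5 and 8-12 microns, and the constant-emissivity assumption are ours.

```python
import math

H = 6.62607015e-34   # Planck constant, J*s
C = 2.99792458e8     # speed of light, m/s
K_B = 1.380649e-23   # Boltzmann constant, J/K


def planck_spectral_radiance(wavelength_um, temp_k):
    """Blackbody spectral radiance in W / (m^2 sr um)."""
    lam = wavelength_um * 1e-6  # microns -> metres
    exponent = H * C / (lam * K_B * temp_k)
    per_metre = (2.0 * H * C ** 2) / (lam ** 5 * math.expm1(exponent))
    return per_metre * 1e-6  # per metre of wavelength -> per micron


def in_band_radiance(temp_k, band_um, emissivity=1.0, steps=2000):
    """Trapezoidal integral of emitted radiance over a band, W / (m^2 sr).

    Assumes a constant (gray) emissivity across the band, which is a
    simplification of real wavelength-dependent surface properties.
    """
    lo, hi = band_um
    step = (hi - lo) / steps
    total = 0.5 * (planck_spectral_radiance(lo, temp_k)
                   + planck_spectral_radiance(hi, temp_k))
    for i in range(1, steps):
        total += planck_spectral_radiance(lo + i * step, temp_k)
    return emissivity * total * step


if __name__ == "__main__":
    for name, band in (("MWIR", (3.0, 5.0)), ("LWIR", (8.0, 12.0))):
        cool = in_band_radiance(300.0, band)
        warm = in_band_radiance(305.0, band)
        print(f"{name}: 5 K rise changes radiance by {100 * (warm / cool - 1):.1f}%")
```

Running the script compares the relative radiance change in each band for a 5 K temperature error, which gives a feel for how thermal prediction errors propagate into the rendered signature.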

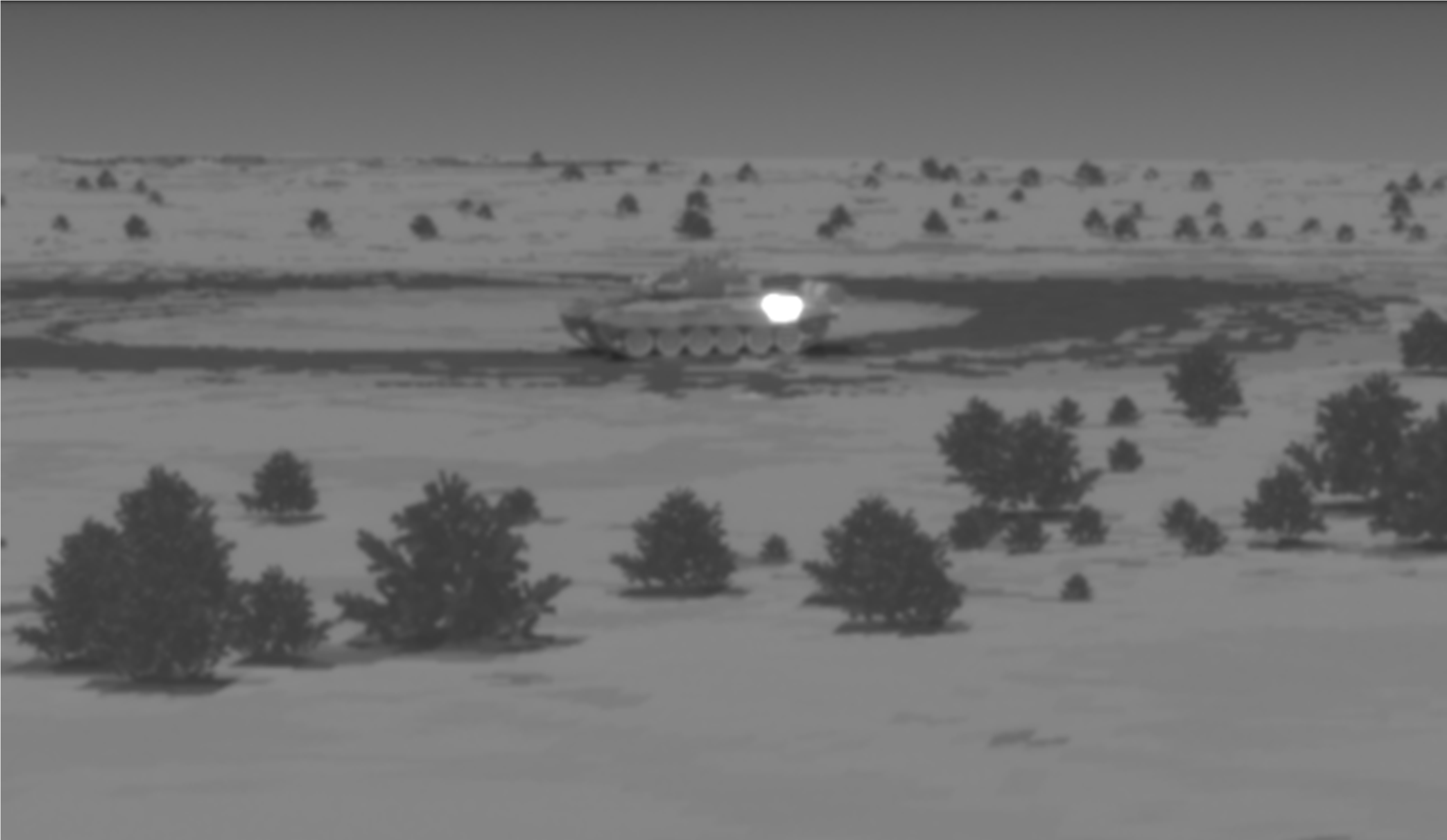

MuSES™ provides realistic temperature and EO/IR sensor radiance predictions based on comprehensive heat transfer calculations using high-resolution 3D geometry, component heat sources, and environmental boundary conditions. The MuSES-based rendering process includes thermal emissions, diffuse and directional specular reflections from environmental and illumination sources, atmospheric effects, and reflections from surrounding clutter discretes such as trees or bushes. The result of this high-fidelity EO/IR simulation procedure is a radiance rendering of a target/background scene from the perspective of a user-prescribed virtual sensor.
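
As a rough illustration of how these contributions combine, the following sketch evaluates a simplified single-band radiance budget: emitted plus diffusely reflected radiance leaving an opaque surface, attenuated along the line of sight, plus path radiance. This is a generic simplification and does not represent the MuSES renderer; the function names, the Beer-Lambert transmittance with a single extinction coefficient, and the example numbers are all assumptions made for illustration.

```python
import math


def transmittance(extinction_per_km, slant_range_km):
    """Beer-Lambert band transmittance for a homogeneous path (assumed)."""
    return math.exp(-extinction_per_km * slant_range_km)


def at_sensor_radiance(blackbody_radiance, environment_radiance, emissivity,
                       extinction_per_km, slant_range_km, path_radiance):
    """In-band radiance reaching the sensor, in the units of the inputs.

    Assumes an opaque, diffuse, gray surface, so reflectance = 1 - emissivity.
    """
    emitted = emissivity * blackbody_radiance
    reflected = (1.0 - emissivity) * environment_radiance
    tau = transmittance(extinction_per_km, slant_range_km)
    return tau * (emitted + reflected) + path_radiance


if __name__ == "__main__":
    # Illustrative values only, in W / (m^2 sr)
    radiance = at_sensor_radiance(
        blackbody_radiance=38.0, environment_radiance=20.0, emissivity=0.9,
        extinction_per_km=0.2, slant_range_km=2.0, path_radiance=5.0)
    print(f"at-sensor radiance: {radiance:.2f} W/(m^2 sr)")
```

Even in this reduced form, the budget shows why slant range and atmospheric state change the apparent contrast between a target and its background.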

CoTherm™ provides automation capabilities for managing the scene simulation process, allowing users to manipulate input parameters to generate MuSES™ imagery with the desired variations. An efficient MuSES/CoTherm-based automated scene simulation procedure affords control over the resultant synthetic image content. A large database of 3D target models exists (including ground vehicles, maritime vessels, manned and autonomous rotary and fixed-wing aircraft, human personnel, etc.), and these models contain accurate geometric features, optical surface properties, and internal heat sources (e.g., engine, exhaust, and electronics). The target models can be combined with various types of terrain or other backgrounds, as well as clutter elements like trees and bushes, to create synthetic scenes that can be ray-traced to generate radiometric images from the perspective of a virtual sensor. CoTherm™ is used to apply a range of environmental conditions, sensor parameters, and other variables to generate a diverse synthetic training image set. This rendered image library is automatically classified to create labeled training data that teaches deep learning algorithms to recognize and/or identify targets in scenes of interest.
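
The idea of sweeping scene variables and labeling each rendered image automatically can be sketched in a few lines of plain Python. This is not the CoTherm interface: the function `build_scene_cases`, the parameter names, and the example values are hypothetical and only show how a full-factorial case list with ground-truth labels might be organized before being handed to a simulation workflow.

```python
import itertools


def build_scene_cases(targets, headings_deg, local_hours, slant_ranges_m):
    """Enumerate every combination of scene variables (full-factorial sweep).

    Each case carries its own ground-truth label, so images rendered from
    the case list need no manual annotation. All field names are hypothetical.
    """
    combos = itertools.product(targets, headings_deg, local_hours, slant_ranges_m)
    cases = []
    for index, (target, heading, hour, slant_range) in enumerate(combos):
        cases.append({
            "case_id": f"case_{index:05d}",
            "label": target,            # class label for training
            "heading_deg": heading,     # target heading
            "local_hour": hour,         # time of day
            "slant_range_m": slant_range,
        })
    return cases


if __name__ == "__main__":
    cases = build_scene_cases(
        targets=["truck", "tank"],
        headings_deg=range(0, 360, 45),
        local_hours=[6, 12, 18, 24],
        slant_ranges_m=[500, 1000, 2000],
    )
    print(len(cases), "cases; first:", cases[0])
```

A full-factorial sweep grows quickly with each added variable, so in practice a sampled subset (for example, random or Latin hypercube sampling) may be a better fit for large parameter spaces.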

This simulation strategy incorporates the following important capabilities:

- Detailed 3D finite element geometry for high-value targets and digital elevation map-based background scenes

- Application of thermal material properties, optical surface properties, internal heat sources, and transient environmental boundary conditions for accurate, mission-specific transient heat transfer calculations

- Ability to incorporate effects of camouflage patterns with spatially varying (spectral) optical surface properties

- Prediction of scene radiances, which combine temperature-based emissions with directional reflections of environmental and illumination sources

- Inclusion of atmospheric impacts such as extinction (due to absorption and scattering) and path radiance

- Ability to specify detector resolution, sensor-target slant range, field of view, sensor perspective, target heading, etc. to increase the robustness of training procedures

- Inclusion of optical sensor system effects (blurring and noise) for training data more representative of real-world measured images

- Ability to automate these capabilities in an efficient, documented process
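
The sensor-effects item in the list above can be illustrated with a small, dependency-free sketch that blurs an ideal radiance image with a separable Gaussian kernel and then adds zero-mean Gaussian noise. This is a generic image-degradation example under our own assumptions, not the sensor model used in MuSES; the function names, the Gaussian approximation of the optical blur, and the additive noise model are all illustrative.

```python
import math
import random


def gaussian_kernel(sigma, radius):
    """Normalized 1D Gaussian weights of length 2 * radius + 1."""
    weights = [math.exp(-(i * i) / (2.0 * sigma * sigma))
               for i in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def blur_rows(image, kernel):
    """Convolve each row with the kernel, clamping indices at the edges."""
    radius = len(kernel) // 2
    blurred = []
    for row in image:
        last = len(row) - 1
        blurred.append([
            sum(w * row[min(max(x + k - radius, 0), last)]
                for k, w in enumerate(kernel))
            for x in range(len(row))
        ])
    return blurred


def transpose(image):
    return [list(column) for column in zip(*image)]


def apply_sensor_effects(image, sigma=1.0, noise_std=0.0, seed=0):
    """Blur along rows, then columns, then add seeded Gaussian noise."""
    kernel = gaussian_kernel(sigma, radius=max(1, int(3 * sigma)))
    blurred = transpose(blur_rows(transpose(blur_rows(image, kernel)), kernel))
    rng = random.Random(seed)  # seeded so a given case is reproducible
    return [[value + rng.gauss(0.0, noise_std) for value in row]
            for row in blurred]


if __name__ == "__main__":
    scene = [[0.0] * 9 for _ in range(9)]
    scene[4][4] = 100.0  # a single hot pixel
    degraded = apply_sensor_effects(scene, sigma=1.0, noise_std=0.5, seed=42)
    print("peak after blur and noise:", round(degraded[4][4], 2))
```

Applying the degradation after rendering keeps the ideal radiance image available, so the same scene can be reused with several blur and noise settings.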

In conclusion, acquiring sufficient measured thermal infrared training data for machine learning algorithms can be challenging, but synthetic imagery is an effective alternative. Simulation models can generate radiance predictions that closely match measured imagery while allowing analysts control over scene variables such as target resolution and aspect angle, target mission profile, background details, sensor characteristics, sensor platform location/motion, season, and time of day. This allows training imagery to be as varied as necessary to obtain appropriate datasets for robust algorithm performance. We have demonstrated this process and resultant algorithm performance (using MuSES-based synthetic imagery) in several recent publications; please check out the links below and contact us for more information!

“Sensitivity analysis of ResNet-based automatic target recognition performance using MuSES-generated EO/IR synthetic imagery” (2023) can be found at https://doi.org/10.1117/12.2663571

MuSES allows you to simulate any environment or condition and generate accurate signature predictions. It provides many powerful features that allow you to model plumes, wakes, humans, atmospheric effects, and much more. Synthetic targets can be embedded into measured or modeled scenes and coupled with simulation tools like DIRSIG. We maintain an extensive library of target models, surface property measurements, weather files, and location data to populate rendered image datasets with the level of detail and variation your engineers and scientists need.

Power up your team's ability to predict detection and customize your software toolchains to generate large numbers of images for algorithm training. Identification-qualified organizations are invited to contact an EO scientist to review your application and determine how MuSES and our engineering team can accelerate your AI development and expand your training image database.

If you are interested in learning more about how the scene simulation capabilities of MuSES can help your team, please feel free to request a live demo of our software.

Visit our website at support.thermoanalytics.com for:

- FAQs

- Webinars

- Tutorials

Webinars:

Simulating the Thermal Impact of a High-Energy Laser on a Battery-Powered UAV with MuSES Webinar

High-Fidelity EO-IR Scene Simulation with MuSES and DIRSIG Webinar

Blogs:

MuSES + Human Thermal Extension

https://blog.thermoanalytics.com/blog/muses-human-thermal-module

EO/IR Services

https://blog.thermoanalytics.com/blog/thermoanalytics-eo-ir-services-overview

Plume Modeling

https://blog.thermoanalytics.com/blog/plume-modeling-muses

Camouflage Texture Mapping

https://blog.thermoanalytics.com/blog/camouflage-texture-mapping-muses

Webpages:

Software:

https://www.thermoanalytics.com/muses

Services:

EO/IR Image Sets

https://www.thermoanalytics.com/eo-ir-image-sets

Signature Management

https://www.thermoanalytics.com/signature-management

Video:

What is MuSES?

https://vimeo.com/manage/videos/457819919?embedded=true&source=video_title&owner=16056768